Code reviews are one of the most important elements of software development. They're where we seek feedback on what we've built, looking to ensure it's understandable, elegant, and free from defects.

A problem with code reviews is that we often need to wait for someone else to have time to look at what we've done. Ideally, we want instant feedback before we submit a PR.

In a previous article (see: Code reviews using Metaphor), I looked at how to build something that could leverage AI to help, but that required a lot of manual steps. We really need a tool for this.

Anatomy of a code review tool

If we want to build an AI-based code reviewer, we should start with some features we'd like it to have.

Ideally, we want something we can integrate with other tools. That implies we want it to run from a command line. Command-line apps need argument flags. We also want to provide a list of files to review.

We also want it to run everywhere, so let's build it in Python.

Every language, project, company, etc., has different approaches to coding conventions, so we want our code review guidelines to be customizable. As we may have code in multiple languages, let's allow for multiple guidelines, too.
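As a rough sketch of that command-line shape (not the final tool), Python's standard `argparse` module can collect a repeatable guideline option and a list of files. The flag names match the ones used later in this article; `build_parser` and the example paths are made up for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with a repeatable guideline flag and positional files."""
    parser = argparse.ArgumentParser(description="Sketch of a code review CLI")
    # action="append" collects every occurrence of -g into one list
    parser.add_argument("-g", "--guideline-dir", action="append",
                        dest="guideline_dirs", default=None,
                        help="Directory of review guidelines (repeatable)")
    parser.add_argument("-o", "--output", help="File to write the prompt to")
    # Anything without a flag is treated as a file to review
    parser.add_argument("files", nargs="*", help="Files to review")
    return parser


args = build_parser().parse_args(
    ["-g", "guides/generic", "-g", "guides/python", "-o", "out.lcp", "app.py"]
)
print(args.guideline_dirs)  # ['guides/generic', 'guides/python']
print(args.files)           # ['app.py']
```

Repeating the flag, rather than accepting a comma-separated list, keeps each directory a separate shell argument, so paths containing commas or spaces need no special handling.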

We'll take a Unix-like approach and design our code review tool to generate a large language model (LLM) prompt as a file but not provide integration with any specific LLM. That can be done manually by the user or via a separate prompt upload or interaction tool, which means it can also work with local LLMs, not just cloud-based ones.

Building the prompt

The trickiest part is planning how to build the prompt. Our AI isn't psychic, and we don't want it to get creative and come up with new ideas for reviewing code each time. We solve this by constructing a large context prompt (LCP) that contains all the information it needs to do the task.

This means the prompt needs:

- All the files to review

- All the coding guidelines we want to apply to those files

- Some instructions on what we want it to do

- Some instructions on how we want it to generate its output
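To make the four ingredients concrete, here is a minimal sketch that assembles them by hand into a single string. It is only an illustration: `build_prompt`, the section labels, and the sample contents are all hypothetical, and it takes file contents as in-memory strings to stay self-contained.

```python
from typing import Mapping


def build_prompt(guidelines: Mapping[str, str], files: Mapping[str, str],
                 task: str, output_format: str) -> str:
    """Join the four ingredients into one large context prompt.

    guidelines and files map a file name to that file's text.
    """
    sections = [f"Guideline: {name}\n{text}" for name, text in guidelines.items()]
    sections += [f"File: {name}\n{text}" for name, text in files.items()]
    sections.append(f"Task:\n{task}")
    sections.append(f"Output format:\n{output_format}")
    # A blank line between sections keeps the boundaries obvious
    return "\n\n".join(sections)


prompt = build_prompt(
    guidelines={"generic.m6r": "Keep functions small."},
    files={"app.py": "print('hello')"},
    task="Review each file against the guidelines.",
    output_format="List one recommendation per line.",
)
print(prompt)
```

Hand-rolled concatenation like this works, but it gets awkward once guidelines are split across several files and directories.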

There's a library available that makes this very simple, m6rclib. This is an embedded parser for a structured document language, Metaphor (see https://github.com/m6r-ai/m6rclib). m6rclib is well suited to this problem:

- Metaphor files are largely natural language and so fit nicely with describing coding guidelines

- It has an `Include:` keyword that lets us compose a series of files into one prompt

- It has an `Embed:` keyword that lets us embed files into a prompt

- It has `Role:`, `Context:` and `Action:` keywords that let us describe the role of the LLM, the context we want it to use, and the action we want it to take.

We stitch together all the elements we want into an overall Metaphor description and let the prompt compiler do the rest!

Coding guidelines

Let's look at a fragment of a coding guideline. This one is a generic guide in Metaphor form. Some sub-points probably want to be expanded, which will likely give us a better review, but these are pretty workable. Some of these may also be too language-specific and want refactoring, but that's easy to do in the future. Similarly, some of these may not be universally accepted. I'm hoping the tool's users will help with this!

    Context: Generic code review guidelines
        Here are a series of guidelines I would like you to consider when you're reviewing software.

        Context: Architecture and design
            The software should follow SOLID principles:

            - Single Responsibility Principle: Each class/function does one thing well.
            - Open/Closed Principle: Open for extension, closed for modification.
            - Liskov Substitution Principle: Derived classes must be substitutable for base classes.
            - Interface Segregation: Keep interfaces small and focused.
            - Dependency Inversion: Depend on abstractions, not concrete implementations.

            In addition, the software should:

            - Use composition over inheritance when possible.
            - Keep coupling low between modules
            - Make dependencies explicit (avoid hidden side effects)
            - Use dependency injection for better testing and flexibility
            - DRY (Don't Repeat Yourself): Eliminate code duplication by abstracting common functionality.
            - KISS (Keep It Simple, Stupid): Strive for simplicity in design and implementation.
            - YAGNI (You Aren't Gonna Need It): Avoid adding functionality until it is necessary.

        Context: Security Best Practices
            The software should:

            - Sanitize all user inputs.
            - Use secure defaults.
            - Never store sensitive data in code.
            - Use environment variables or command line parameters for configuration.
            - Implement proper error handling and logging.
            - Use latest versions of dependencies.
            - Follow principle of least privilege.
            - Validate all external data.
            - Implement proper authentication and authorization mechanisms.
            - Review dependencies to address known vulnerabilities.
            - Encrypt sensitive data at rest and in transit.
            - Apply input validation on both client and server sides.

        Context: Error handling
            The software handles error conditions well:

            - Detect and handle all exception or failure conditions.
            - Provide meaningful error messages.
            - Log errors appropriately.
            - Handle resources properly.
            - Fail fast and explicitly.

...

Building commit-critic

At this point, we've got a design, so now we want to build the tool. We could dive in and start coding, but wouldn't it be better to have an AI do that part, too? Having it AI-built has a lot of benefits:

- It's much quicker to build the code (LLMs "type" much faster than people!)

- It will do all the boring stuff (exception handling, etc.) without complaining

- If it knows enough to build the tool, then it can write the user manual

- If we want tests, it can build them

- We can rapidly try new ideas and discard them if they aren't useful

- It can do all the future maintenance

Some of these might sound far-fetched. Hold that thought, and we'll come back to it later.

More Metaphor

commit-critic leverages Metaphor to create LLM prompts at runtime, but Metaphor was initially designed to help me build software using AI. To support this, I wrote a stand-alone Metaphor compiler, m6rc (see https://github.com/m6r-ai/m6rc). Aside: m6rc used to be quite heavyweight but is now a very light wrapper around m6rclib, too.

If we take and expand on what we have already looked at, we can describe commit-critic in Metaphor. Importantly, we're describing what we want the tool to do - i.e. the business logic. We're not describing the code!

Role:

You are a world-class python programmer with a flair for building brilliant software

Context: commit-critic application

commit-critic is a command line application that is passed a series of files to be reviewed by an AI LLM.

Context: Tool invocation

As a developer, I would like to start my code review tool from the command line, so I can easily

configure the behaviour I want.

Context: Command line tool

The tool will be run from the command line with appropriate arguments.

Context: No config file

The tool does not need a configuration file.

Context: "--output" argument

If the user specifies a "-o" or "--output" argument then this defines the file to which the output prompt

should be generated.

Context: "--help" argument

If the user specifies a "-h" or "--help" argument then display a help message with all valid arguments,

any parameters they may have, and their usage.

Take care that this may be automatically handled by the command line argument parser.

Context: "--guideline-dir" argument

If the user specifies a "-g" or "--guideline-dir" argument then use that as part of the search path that is

passed to the Metaphor parser. More than one such argument may be provided, and all of them should be passed

to the parser.

Context: "--version" argument

If the user specifies a "-v" or "--version" argument then display the application version number (v0.1 to

start with).

Context: default arguments

If not specified with an argument flag, all other inputs should be assumed to be input filenames.

Context: Check all arguments

If the tool is invoked with unknown arguments, display correct usage information.

Context: Check all argument parameters

The tool must check that the form of all parameters correctly matches what is expected for each

command line argument.

If the tool is invoked with invalid parameters, display correct usage information.

Context: Error handling

The application should use the following exit codes:

- 0: Success

- 1: Command line usage error

- 2: Data format error (e.g. invalid Metaphor syntax)

- 3: Cannot open input file

- 4: Cannot create output file

Error messages should be written to stderr and should include:

- A clear description of the error

- The filename if relevant

- The line number and column if relevant for syntax errors

Context: Environment variable

The environment variable "COMMIT_CRITIC_GUIDELINE_DIR" may contain one or more directories that will be scanned

for guideline files, similar to the `--guideline-dir` command line argument, except multiple directories may be

specified in the environment variable. The path handling should match the default behaviour for the operating

system on which the application is being run.

Context: Application logic

The application should take all of the input files and incorporate them into a string that represents the

root of a metaphor file set.

This root will look like this:

```metaphor
Role:
    You are an expert software reviewer, highly skilled in reviewing code written by other engineers. You are
    able to provide insightful and useful feedback on how their software might be improved.

Context: Review guidelines
    Include: [guide files go here]

Action: Review code
    Please review the software described in the files provided here:

    Embed: [review files go here]

    I would like you to summarise how the software works.

    I would also like you to review each file individually and comment on how it might be improved, based on the
    guidelines I have provided. When you do this, you should tell me the name of the file you believe may want to
    be modified, the modification you believe should happen, and which of the guidelines the change would align with.
    If any change you envisage might conflict with a guideline then please highlight this and the guideline that might
    be impacted.

    The review guidelines include generic guidance that should be applied to all file types, and guidance that should
    only be applied to a specific language type. In some cases the specific guidance may not be relevant to the files
    you are asked to review, and if that's the case you need not mention it. If, however, there is no specific
    guideline file for the language in which a file is written then please note that the file has not been reviewed
    against a detailed guideline.

    Where useful, I would like you to write new software to show me how any modifications should look.
```

If you are passed one or more directory names via the `--guideline-dir` argument or via the

COMMIT_CRITIC_GUIDELINE_DIR environment variable then scan each directory for `*.m6r` files. If no directories

were specified then scan the current working directory for the `*.m6r` files.

In the root string, replace the `Include: [guide files go here]` with one line per m6r file

you discover, replacing the "[guide files go here]" with the matching path and filename. If you do not find

any guideline files then exit, reporting an appropriate error.

In the root string, replace the `Embed: [review files go here]` with one line per input, replacing the

"[review files go here]" with the input files provided on the command line.

You need to pay very close attention to the indentation of the Metaphor code block you have just seen as that

needs to be replicated in the output.

Once you have the string representation of the root of the Metaphor file set you must call the Metaphor parser,

passing in the string so that it can be compiled into the AST. There are format functions in the m6rclib

package that will handle generation of an output prompt and to output exceptions in the event of parser failures.

Context: Metaphor (.m6r) file parsing

The metaphor code review files are to be processed using the m6rclib library. The source code for the

library is presented here:

Embed: ../m6rclib/src/m6rclib/*.py

Context: Python implementation and dependencies

As an engineer working with the application, I want the application to be easy to use and understand,

so I can maintain and enhance it over time.

Context: Implement in Python 3

The application will be written in the latest version of Python 3.

Context: Indentation of code

Code must be indented by 4 spaces.

Context: Use docstrings

Use docstrings to describe all modules, classes, and functions. This should follow PEP 257 guidelines.

Context: Use type hints

Use type hints for function arguments and return values.

Context: Use comments

Use additional comments to describe any complex logic.

Context: PEP 8 imports

The import list in any module should follow PEP 8 guidelines, including the ordering of imports.

Context: Avoid unnecessary elif and else statements

To improve readability, do not use elif or else statements if the preceding statement returns.

For example, do this:

```
if condition:
    return

next_statement()
```

instead of this:

```
if condition:
    return
else:
    next_statement()
```

Context: Dependencies

Leverage standard library tools before custom solutions, unless specifically instructed.

Context: Exception handling

Use specific exceptions instead of bare except.

Action: Build the software

# Please review the requirements provided in the Context section and assess if they are clear and unambiguous. If

# anything is unclear then please suggest potential improvements. Please suggest edits to the Metaphor context where

# these may be appropriate.

#

# Take care to review all the behaviours asked for and do not omit anything.

Please review the requirements provided in the Context section and build the software described. Take care to

address all the behaviours asked for and do not omit anything.

Do not produce any other commentary other than the code.

If the software should be structured into multiple files then please provide each file separately and identify the

name of each one as you produce it.

An earlier version of the application is provided here. Please use this as a template. This version may not meet

all the requirements provided in the Context section, so you may need to add or remove code to meet the full set of

requirements specified.

Embed: src/commit_critic/commit_critic.py
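The exit codes and error-message rules in the description above map naturally onto an enumeration and a small formatting helper. The following is a minimal sketch of that idea only: `ExitCode`, `format_error`, and `fail` are hypothetical names, and the generated code shown in the next section uses the numeric values directly.

```python
import sys
from enum import IntEnum
from typing import NoReturn, Optional


class ExitCode(IntEnum):
    """Exit codes from the description; the member names are hypothetical."""
    SUCCESS = 0
    USAGE_ERROR = 1
    DATA_FORMAT_ERROR = 2
    CANNOT_OPEN_INPUT = 3
    CANNOT_CREATE_OUTPUT = 4


def format_error(description: str, filename: Optional[str] = None,
                 line: Optional[int] = None, column: Optional[int] = None) -> str:
    """Build an error message with the filename and position when relevant."""
    location = ""
    if filename is not None:
        location = filename
        # Line and column are only meaningful for syntax errors
        if line is not None and column is not None:
            location += f":{line}:{column}"
        location += ": "
    return f"Error: {location}{description}"


def fail(code: ExitCode, description: str, filename: Optional[str] = None) -> NoReturn:
    """Write the message to stderr and exit with the given code."""
    sys.stderr.write(format_error(description, filename) + "\n")
    sys.exit(int(code))


print(format_error("Unexpected token", "guide.m6r", 12, 5))
# Error: guide.m6r:12:5: Unexpected token
```

Naming the codes once means a change to the list in the description only has to be reflected in one place.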

Generating the executable code

We can compile this into a prompt ready to hand to our LLM:

    m6rc commit-critic.m6r -o out.lcp

In this instance, I handed the task to Claude 3.5 Sonnet:

    #!/usr/bin/env python3
    # Copyright 2024 M6R Ltd.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    """
    commit-critic - A command line tool for AI-assisted code reviews.

    This module provides the main entry point for the commit-critic application,
    which processes source files for AI-based code review using the Metaphor
    language format.

    The tool accepts input files and generates a properly formatted prompt
    that can be used with AI systems to perform code reviews. It supports
    various command line arguments for configuration and uses the Metaphor
    language format for structuring the review request.
    """

    import argparse
    import os
    import sys
    from dataclasses import dataclass
    from pathlib import Path
    from typing import List, Optional

    from m6rclib import (
        MetaphorASTNode,
        MetaphorParser,
        MetaphorParserError,
        format_ast,
        format_errors,
    )


    @dataclass
    class ReviewConfiguration:
        """Configuration settings for the review generator.

        Attributes:
            output_file: Optional file path for output
            guideline_paths: List of paths to search for guidelines
            input_files: List of input files to review
            version: Version string of the application
        """
        output_file: Optional[str]
        guideline_paths: List[str]
        input_files: List[str]
        version: str = "v0.1"


    class MetaphorReviewGenerator:
        """Handles the generation of code reviews using Metaphor templates."""

        def __init__(self, config: ReviewConfiguration):
            """Initialize the review generator.

            Args:
                config: Configuration settings for the review
            """
            self.config = config
            self.guidelines: List[str] = []
            self.parser = MetaphorParser()

        def _get_env_guideline_paths(self) -> List[str]:
            """Get guideline paths from environment variable.

            Returns:
                List of paths from COMMIT_CRITIC_GUIDELINE_DIR environment variable
            """
            env_paths = os.getenv("COMMIT_CRITIC_GUIDELINE_DIR", "")
            if not env_paths:
                return []

            return [p.strip() for p in env_paths.split(os.pathsep) if p.strip()]

        def find_guideline_files(self, paths: Optional[List[str]]) -> List[str]:
            """Find all .m6r files in the specified paths.

            Args:
                paths: List of paths to search, or None to use current directory

            Returns:
                List of discovered .m6r files

            Raises:
                SystemExit: If no guideline files are found or on permission errors
            """
            search_paths = []

            # Add environment variable paths
            env_paths = self._get_env_guideline_paths()
            if env_paths:
                search_paths.extend(env_paths)

            # Add command line paths if specified
            if paths:
                search_paths.extend(paths)

            # Use current directory if no paths specified
            if not search_paths:
                search_paths = ['.']

            guidelines = []
            for path in search_paths:
                try:
                    path_obj = Path(path)
                    if not path_obj.exists():
                        sys.stderr.write(f"Warning: Path does not exist: {path}\n")
                        continue

                    if not path_obj.is_dir():
                        sys.stderr.write(f"Warning: Path is not a directory: {path}\n")
                        continue

                    guidelines.extend(path_obj.glob('*.m6r'))

                except PermissionError as e:
                    sys.stderr.write(f"Error: Permission denied accessing path {path}: {e}\n")
                    sys.exit(2)

            if not guidelines:
                sys.stderr.write(
                    f"Error: No .m6r files found in search paths: {', '.join(search_paths)}\n"
                )
                sys.exit(2)

            return [str(p) for p in guidelines]

        def validate_files(self, files: List[str]) -> None:
            """Validate that all input files exist and are readable.

            Args:
                files: List of files to validate

            Raises:
                SystemExit: If any file cannot be accessed
            """
            for file in files:
                path = Path(file)
                if not path.is_file():
                    sys.stderr.write(f"Error: Cannot open input file: {file}\n")
                    sys.exit(3)

                if not os.access(path, os.R_OK):
                    sys.stderr.write(f"Error: No read permission for file: {file}\n")
                    sys.exit(3)

        def create_metaphor_content(self, guidelines: List[str], files: List[str]) -> str:
            """Create the Metaphor content string.

            Args:
                guidelines: List of guideline files to include
                files: List of files to review

            Returns:
                String containing the complete Metaphor content
            """
            include_lines = '\n'.join(f'    Include: {g}' for g in guidelines)
            embed_lines = '\n'.join(f'    Embed: {f}' for f in files)

            return f"""Role:
        You are an expert software reviewer, highly skilled in reviewing code written by other engineers. You are
        able to provide insightful and useful feedback on how their software might be improved.

    Context: Review guidelines
    {include_lines}

    Action: Review code
        Please review the software described in the files provided here:

    {embed_lines}

        I would like you to summarise how the software works.

        I would also like you to review each file individually and comment on how it might be improved, based on the
        guidelines I have provided. When you do this, you should tell me the name of the file you believe may want to
        be modified, the modification you believe should happen, and which of the guidelines the change would align with.
        If any change you envisage might conflict with a guideline then please highlight this and the guideline that might
        be impacted.

        The review guidelines include generic guidance that should be applied to all file types, and guidance that should
        only be applied to a specific language type. In some cases the specific guidance may not be relevant to the files
        you are asked to review, and if that's the case you need not mention it. If, however, there is no specific
        guideline file for the language in which a file is written then please note that the file has not been reviewed
        against a detailed guideline.

        Where useful, I would like you to write new software to show me how any modifications should look."""

        def write_output(self, content: str, output_file: Optional[str]) -> None:
            """Write content to the specified output file or stdout.

            Args:
                content: Content to write
                output_file: Optional output file path

            Raises:
                SystemExit: If output file cannot be written
            """
            if output_file:
                try:
                    with open(output_file, 'w', encoding='utf-8') as f:
                        f.write(content)

                except OSError as e:
                    sys.stderr.write(f"Error: Cannot create output file {output_file}: {e}\n")
                    sys.exit(4)

                return

            sys.stdout.write(content)

        def validate_and_prepare(self) -> None:
            """Validate input files and prepare guidelines.

            Raises:
                SystemExit: If validation fails
            """
            if not self.config.input_files:
                sys.stderr.write("Error: No input files specified\n")
                sys.exit(1)

            self.validate_files(self.config.input_files)
            self.guidelines = self.find_guideline_files(self.config.guideline_paths)

        def generate_review(self) -> None:
            """Generate the code review.

            Raises:
                SystemExit: If review generation fails
            """
            content = self.create_metaphor_content(self.guidelines, self.config.input_files)

            try:
                ast: MetaphorASTNode = self.parser.parse(
                    content,
                    "<generated>",
                    self.config.guideline_paths or ["."]
                )
                self.write_output(format_ast(ast), self.config.output_file)

            except MetaphorParserError as e:
                sys.stderr.write(format_errors(e.errors))
                sys.exit(2)


    def parse_arguments() -> ReviewConfiguration:
        """Parse and validate command line arguments.

        Returns:
            ReviewConfiguration containing the parsed arguments
        """
        parser = argparse.ArgumentParser(
            description='Generate AI-assisted code reviews using Metaphor templates'
        )
        parser.add_argument(
            '-o', '--output',
            help='Output file for the generated prompt',
            type=str
        )
        parser.add_argument(
            '-g', '--guideline-dir',
            help='Path to search for Metaphor guideline files',
            action='append',
            type=str,
            dest='guideline_paths'
        )
        parser.add_argument(
            '-v', '--version',
            help='Display version information',
            action='version',
            version='v0.1'
        )
        parser.add_argument(
            'files',
            help='Files to review',
            nargs='*'
        )

        args = parser.parse_args()

        return ReviewConfiguration(
            output_file=args.output,
            guideline_paths=args.guideline_paths,
            input_files=args.files
        )


    def main() -> None:
        """Main entry point for the application."""
        config = parse_arguments()
        generator = MetaphorReviewGenerator(config)
        generator.validate_and_prepare()
        generator.generate_review()


    if __name__ == '__main__':
        main()
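The search-path handling is the easiest part of the listing to try in isolation, because it needs nothing beyond the standard library. The sketch below is not commit-critic's code: `collect_search_paths` and `find_guidelines` are hypothetical helpers that follow the same ordering as the listing (environment variable first, then command-line directories, then the current directory as a fallback). Sorting the results is an addition, made here so that the output is deterministic.

```python
import os
import tempfile
from pathlib import Path
from typing import List, Optional


def collect_search_paths(cli_dirs: Optional[List[str]], env_value: str) -> List[str]:
    """Combine environment and command-line directories, defaulting to '.'."""
    # os.pathsep is ':' on Unix-like systems and ';' on Windows
    env_dirs = [p.strip() for p in env_value.split(os.pathsep) if p.strip()]
    return (env_dirs + (cli_dirs or [])) or ["."]


def find_guidelines(search_paths: List[str]) -> List[str]:
    """Return the *.m6r files in each existing directory, sorted per directory."""
    found: List[str] = []
    for directory in search_paths:
        path = Path(directory)
        if path.is_dir():
            # sorted() is an addition here, to keep the order stable across runs
            found.extend(str(p) for p in sorted(path.glob("*.m6r")))
    return found


with tempfile.TemporaryDirectory() as tmp:
    for name in ("python.m6r", "generic.m6r", "notes.txt"):
        Path(tmp, name).write_text("Context: example\n", encoding="utf-8")
    # The second directory does not exist and is skipped
    env_value = os.pathsep.join([tmp, os.path.join(tmp, "missing")])
    paths = collect_search_paths(None, env_value)
    names = [Path(p).name for p in find_guidelines(paths)]
    print(names)  # ['generic.m6r', 'python.m6r']
```

A stable file order matters more than it might seem: the guideline files become `Include:` lines in the prompt, so an unstable order would produce a different prompt from the same inputs.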

Testing the output

commit-critic needs a little extra python packaging to run as a stand-along application, but we can test it:

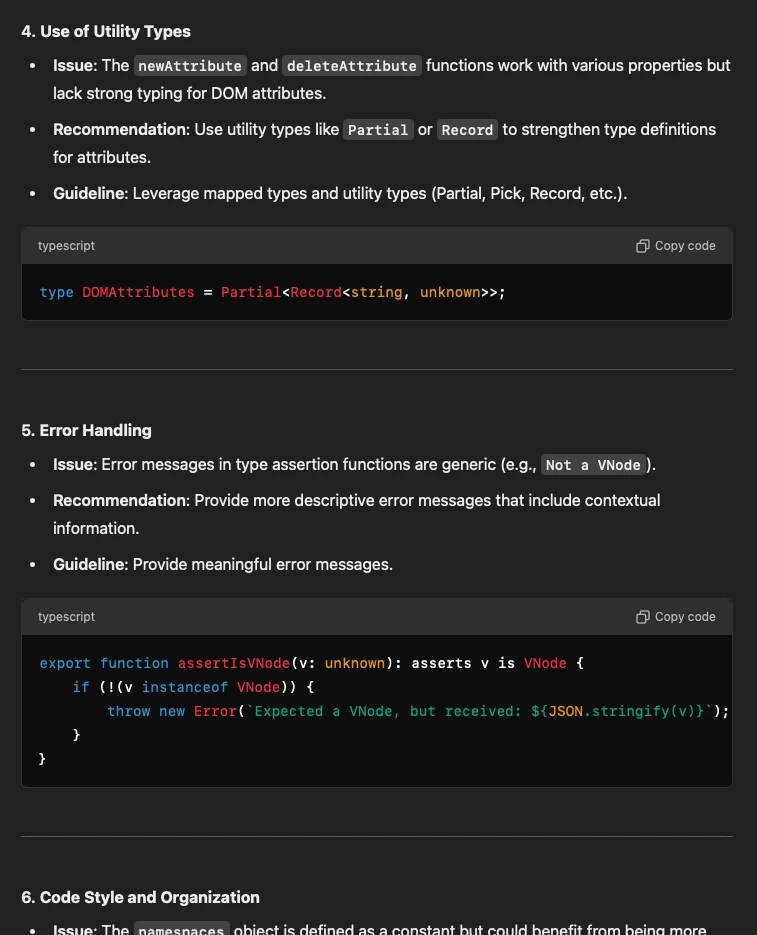

python3 commit-critic.py -g <review-dir-path> -o out.lcp <file-to-test>The following is a fragment of the output from ChatGPT 4o when I asked it to review part of a virtual DOM implementation I build a few months ago. As you can see, it produces a series of recommendations, and tells you which guideline applies. This makes it much easier to understand why it believes a change might be needed, so you can use your own judgement about whether to take the advice or not.

Revisiting the potential benefits of AI-built software

Earlier in this article, I mentioned some potential benefits of AI-built software. commit-critic isn't the only software I've been designing in the last few weeks, but it demonstrates many of these benefits:

- It's much quicker to build the code (LLMs "type" much faster than people!): you can try this now!

- It will do all the boring stuff (exception handling, etc.) without complaining: you'll see this is all there in the committed code.

- If it knows enough to build the tool, then it can write the user manual: Claude wrote the README.md file on the GitHub repo using a slightly modified version of the Metaphor description

- We can rapidly try new ideas and discard them if they aren't useful: if you poke at the git history, you'll see earlier iterations of commit-critic. Some ideas got dropped, some new ones were added, and the AI coded all the modifications.

- It can do all the future maintenance: you can try this yourself too by changing any of the requirements or by editing the coding guidelines used by commit-critic

The one I haven't covered yet is "If we want tests, it can build them". I haven't built tests for commit-critic yet. However, I did need tests for m6rclib. Achieving 100% test coverage over statements and branches currently takes just over 1300 lines of unit tests. Claude 3.5 Sonnet wrote and debugged all of those in about 5-6 hours, starting from another Metaphor description.

Sometimes, the future is here already!

The sources are on GitHub

All the code you see here and my initial code guidelines are available on GitHub. The software is open-source under an Apache 2.0 license.

Please see: https://github.com/m6r-ai/commit-critic

Postscript

This is a story about AI, so it wouldn't be complete without telling you the name for the tool came from Claude 3.5 Sonnet after I asked it to come up with some ideas! That conversation wandered down a very entertaining rabbit hole for 5 minutes, and I'm still wondering when I'll get around to designing something called "Debugsy Malone". Who says AIs can't have a sense of humour too?