Metaphor is designed to help you build software, but a key part of the software lifecycle is reviewing what we've built to make sure it does what we expect it to do. So, how do we get Metaphor to help with this problem?

Creating a code review engine

Our starting point is to recognize that this is just another variant of a familiar problem: we want a large language model (LLM) to take on a role, work from some relevant context, and perform some actions. This is exactly the sort of problem Metaphor is designed to solve. It's also designed to solve it in a way that lets us try ideas quickly, build on the ones that work well, and discard the ones that don't.
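To make the role, context, and action split concrete, here's a minimal Python sketch of the idea. This is not how Metaphor is implemented, and the output is plain text rather than Metaphor syntax; `ReviewPrompt` and `render` are hypothetical names I've made up purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewPrompt:
    """Hypothetical container for the three parts of a prompt."""

    role: str
    action: str
    context: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the role, each context item, and the action into one text."""
        sections = [f"Role:\n{self.role}"]
        sections += [f"Context:\n{item}" for item in self.context]
        sections.append(f"Action:\n{self.action}")
        return "\n\n".join(sections)


# Example values only; a real prompt would be much more detailed.
prompt = ReviewPrompt(
    role="You are an expert code reviewer.",
    context=["Follow PEP 8.", "Keep functions focused."],
    action="Review the files I provide.",
)
print(prompt.render())
```

Keeping the three parts separate is what makes it cheap to experiment: we can swap the role, add a context item, or reword the action without touching the rest.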

Define a role

The Metaphor syntax allows us to specify a role that we'd like our LLM to fulfil. In this case we're looking for it to be an expert code reviewer. We can therefore start with something like this:

    Role: Code reviewer
        You are an expert software engineer, highly skilled in reviewing code
        written by other engineers. You are able to provide highly insightful and
        useful feedback on how their software might be improved.

We might be able to improve on this in the future, but it sets the scene.

Define some code review rules

The next thing needed is some rules against which to review our code. This is quite subtle because the LLM training data invariably contains a lot of information about what makes for good code. There are a few problems with this:

- Any given LLM probably has too much information and won't be consistent about what it looks for.

- Different LLMs will look for different things.

- The things the LLMs might want to look for may not match things that we really care about. For example, they won't know about any project or company specific rules.

One thing we can do, however, is ask our available AIs what they consider to be important code review rules using an interactive session with them.

When I did this with Claude 3.5 Sonnet, here's a fragment of what it replied:

- Code Style and Readability
  - Follow PEP 8 style guide consistently
  - Use meaningful variable and function names (descriptive_name vs x)
  - Keep functions focused and under 50 lines when possible
  - Use type hints for function arguments and return values
  - Break complex expressions into well-named variables
  - Limit line length to 79-88 characters
  - Use consistent indentation (4 spaces, not tabs)
  - Add docstrings for modules, classes, and functions
  - Include examples in docstrings for complex functionality
- Architecture and Design
  - Follow SOLID principles
  - Use composition over inheritance when possible
  - Keep coupling low between modules
  - Make dependencies explicit (avoid hidden side effects)
  - Use dependency injection for better testing and flexibility
- Performance Considerations
  - Use appropriate data structures (dict for lookups, set for uniqueness)
  - Avoid unnecessary list/dict comprehensions inside loops
  - ...
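Once we have a list like this, it needs to end up as text inside a Context: block. Here's a small Python sketch of one way to turn a dictionary of rules into that shape. The nested `Context:` layout, the block title, and the `rules_to_context` helper are my own assumptions for illustration, so check the output against the Metaphor documentation before relying on it.

```python
def rules_to_context(title: str, sections: dict[str, list[str]]) -> str:
    """Render review rules as nested, indented Context: blocks (assumed layout)."""
    lines = [f"Context: {title}"]
    for heading, rules in sections.items():
        # Each category becomes a nested block, indented by four spaces.
        lines.append(f"    Context: {heading}")
        for rule in rules:
            lines.append(f"        - {rule}")
    return "\n".join(lines)


# A couple of the rules from the reply above, used as sample data.
RULES = {
    "Code style and readability": [
        "Follow PEP 8 style guide consistently",
        "Use type hints for function arguments and return values",
    ],
    "Architecture and design": [
        "Follow SOLID principles",
        "Keep coupling low between modules",
    ],
}

print(rules_to_context("Python review guidelines", RULES))
```

Writing the rules down explicitly like this is what addresses the consistency problem: every LLM gets the same checklist, and project-specific rules can sit alongside the generic ones.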

Define what we want to review

Within our top-level Context: block we also need to say what it is we'd like our LLM to review. We can do this with the Metaphor Embed: keyword. As of v0.2, Embed: can also take wildcards, so this is easier to do!

    Context: Files
        The following files form the software I would like you to review:

        # Replace this next line with the files you would like to review.
        Embed: ../m6rc/src/m6rc/*.py

In this instance we're going to review all the Python source files in the Metaphor compiler, m6rc.
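If you want to check which files a wildcard like `*.py` would pick up before running a review, a quick Python sketch can help. I'm assuming here that the wildcard behaves like an ordinary shell-style glob; the `expand_embed` helper and the sample file names are hypothetical, and this is not the code m6rc itself uses.

```python
from pathlib import Path
import tempfile


def expand_embed(pattern: str, base: Path) -> list[str]:
    """Return the names of files directly under `base` that match `pattern`."""
    return sorted(path.name for path in base.glob(pattern) if path.is_file())


# Build a throwaway directory so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("lexer.py", "parser.py", "README.md"):
        (root / name).write_text("")
    matched = expand_embed("*.py", root)

print(matched)  # ['lexer.py', 'parser.py']
```

Only the two `.py` files match, so the README would be left out of the review.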

Define the action we'd like the AI to take

Our Action: block simply needs to describe what the output should look like:

    Action: Review code
        Please review the software described in the files I provided to you.

        I would like you to summarise how the software works.

        I would also like you to review each file individually and comment on how
        it might be improved. When you do this, you should tell me the name of the
        file you're reviewing, and the modification you believe should happen. Where
        useful, I would like you to write new software to show me how those
        modifications should look.

Strictly, we don't need the "summarise" request, but it's useful to see if the AI understood the software.

Running the code

To make this easier, I posted the source code on GitHub. You can find it at:

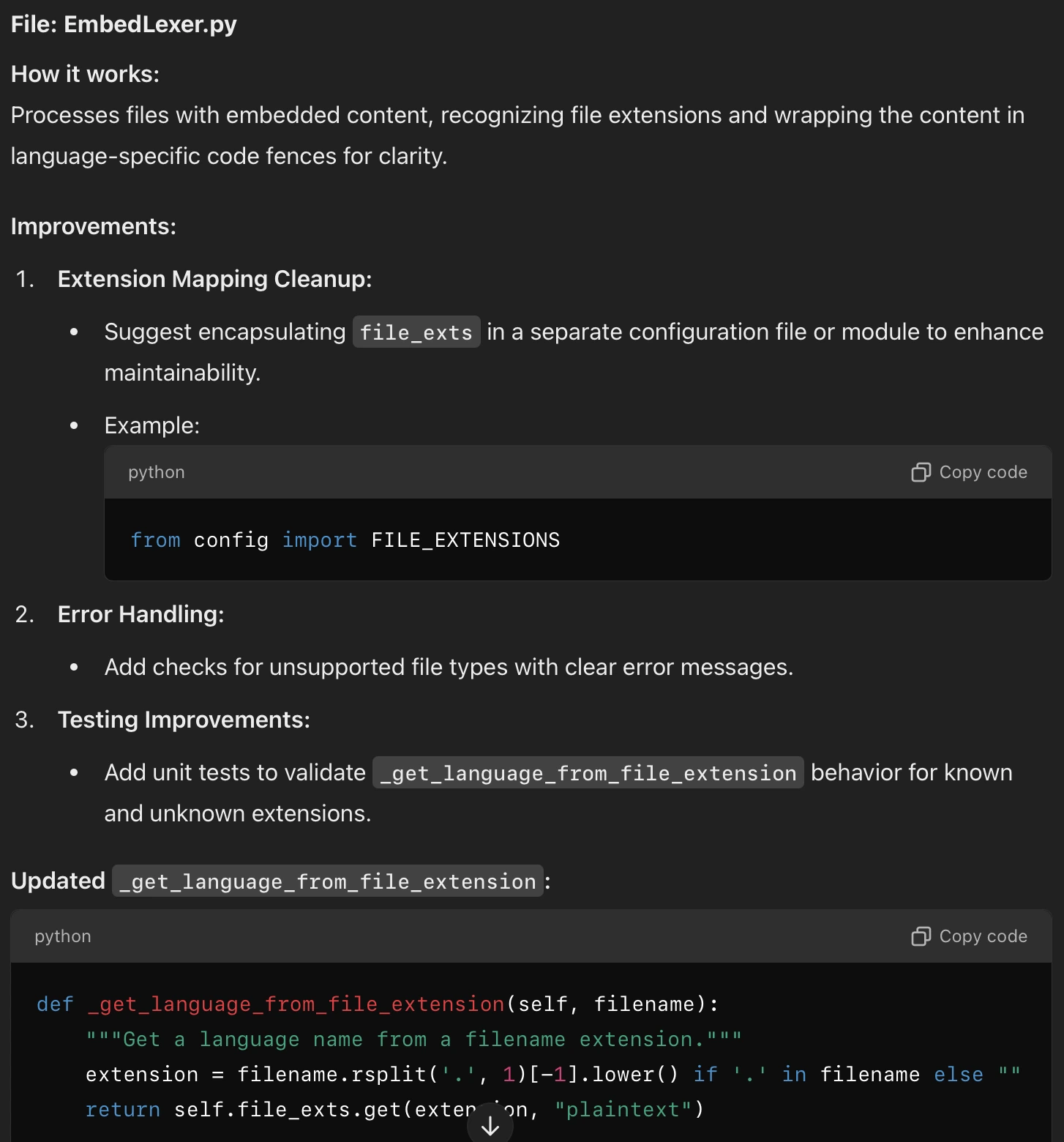

https://github.com:/m6r-ai/demo-code-review

The output is too long to post here, but here's a snapshot from the middle of what ChatGPT 4o generated:

It turns out there were quite a lot of really good suggestions to improve this software!

What next?

We've seen how we can use Metaphor to build the core of a code reviewing engine.

Given Metaphor's design, we can also evolve the code review capabilities over time. If we want to add new review guidelines we can simply update the relevant .m6r files and they're available the next time we go to review our code.

As an example, after I got the first working reviews I wrote another Metaphor file that passed in the code review suggestions from Claude 3.5 Sonnet, and asked ChatGPT o1 to suggest improvements. I merged these into the version you'll see in the git repo.

Why don't you give this a try?

I'm planning to keep adding to the review guidelines in the git repo. If you've got suggestions to improve the current ones, or new ones you'd like to add (perhaps for different languages) then please do reach out or submit a PR.